“I get it. I empathise. I’m with you.” These are some of our simplest yet most profound phrases. They are an expression of our humanity, our need to connect meaningfully with others. They are also at the heart of one of the most exciting and potentially transformative challenges we face as deep tech innovators – building empathy into human-machine interactive systems.

The Human-Machine Understanding team here at CC is currently engaged in early-stage research into AI empathy. In this article, we plan to confront the fundamental questions that are driving and characterising the work. Namely, what exactly is an empathetic machine and why is it important for humans that we develop this? We’ll use examples from the business environment as well as the wellbeing space to illustrate our key points.

Let’s start right at the top by explaining why we believe the development of artificial empathy is so vital right now. The answer is clear. In recent months, the wider world has really woken up to the incredible potential of machine learning. With these transformative and disruptive AI-based technologies, machines are acquiring more sophisticated abilities and partnering with us to solve harder challenges. But as ever, excitement is tinged with concerns over safety, privacy, and ethics. Balances must be struck.

We must prepare for the emerging needs of this trend by developing empathetic AI that works comfortably and safely alongside us through deeper understanding. If machines understand our emotions, motivations and how these will affect our behaviour, they will be better placed to improve our performance and wellbeing, enhance productivity responsibly, and even allow us to achieve our sustainability goals.

At a business level, the potential is vast. CC believes that incredible opportunity awaits ambitious companies who are first to harness the promise of Human-Machine Understanding. By adopting AI, computational models, and sensing, they will be adding human understanding. We think people will flock to a new wave of emotionally and socially intelligent products and services. They’ll render today’s most sophisticated user interfaces lifeless, dull and totally redundant.

What is empathetic AI?

So, what exactly do we mean by an empathetic machine? As we know, empathy is being able to step into another’s shoes and understand things from their point of view. This underpins humanity’s ability to adapt to others and build stronger relationships over time.

Historically, machines have been designed without the capacity for empathy. Identifying ways to integrate insights from psychology into the next generation of AI-enabled machines would allow us to take one step further in building adaptive machines that understand us. They will probably never fully embody human companionship, but they can certainly be better than they are today.

Let’s now dig a little deeper into the reasons why technologies with better human-machine understanding are so important. We interact with technology at personal, professional and societal levels every day – and for good reason.

Research and experience show that a hybrid human-machine team will outperform a human-only or machine-only team. From fields like cancer diagnosis to warehouse stocking, human strategic guidance combined with the tactical acuity and tirelessness of a machine is a winning combination.

The trend now is that these human-machine interactions are not just increasing in frequency, but also deepening in complexity. Machines fuelled by predictive analytics and language processing and generation – all hooked into a cloud ecosystem with edge devices – are helping us by spotting hidden trends, foreseeing challenges, and even proposing solutions.

Consequently, they’re able to partner with us to tackle tougher challenges. It follows, then, that we must invest in technologies which enable us to direct and collaborate with machines – tapping their unique strengths to achieve our own goals. This is what brings us to the imperative of machines with empathy – machines that not only perform a task well, but also understand us and respond to our true needs, so that we can perform tasks well, together.

As well as emotional understanding, empathy also relates to the cognitive and social experiences of others. These are not isolated from each other, after all. Empathy allows us to adapt to each other’s needs and to eventually have a shared understanding of what we are trying to do, and why we’re doing it. This shared mental model is key for us humans to be able to interact effectively with each other, in personal or professional settings alike.

Machines are not currently designed to do this. At best, they react to specific aspects of our behaviour, but do not try to infer what these behaviours mean or what mental processes have led us to exhibit them. This means that machines working with us have very limited context on how we are thinking or feeling about what we’re doing, why we’re doing it, and what is affecting our intentions and behaviour.

The burden of understanding, adapting and building a mental model is therefore fully on us. The machine will not try to meet us in the middle or resolve mismatches in our mutual understanding.

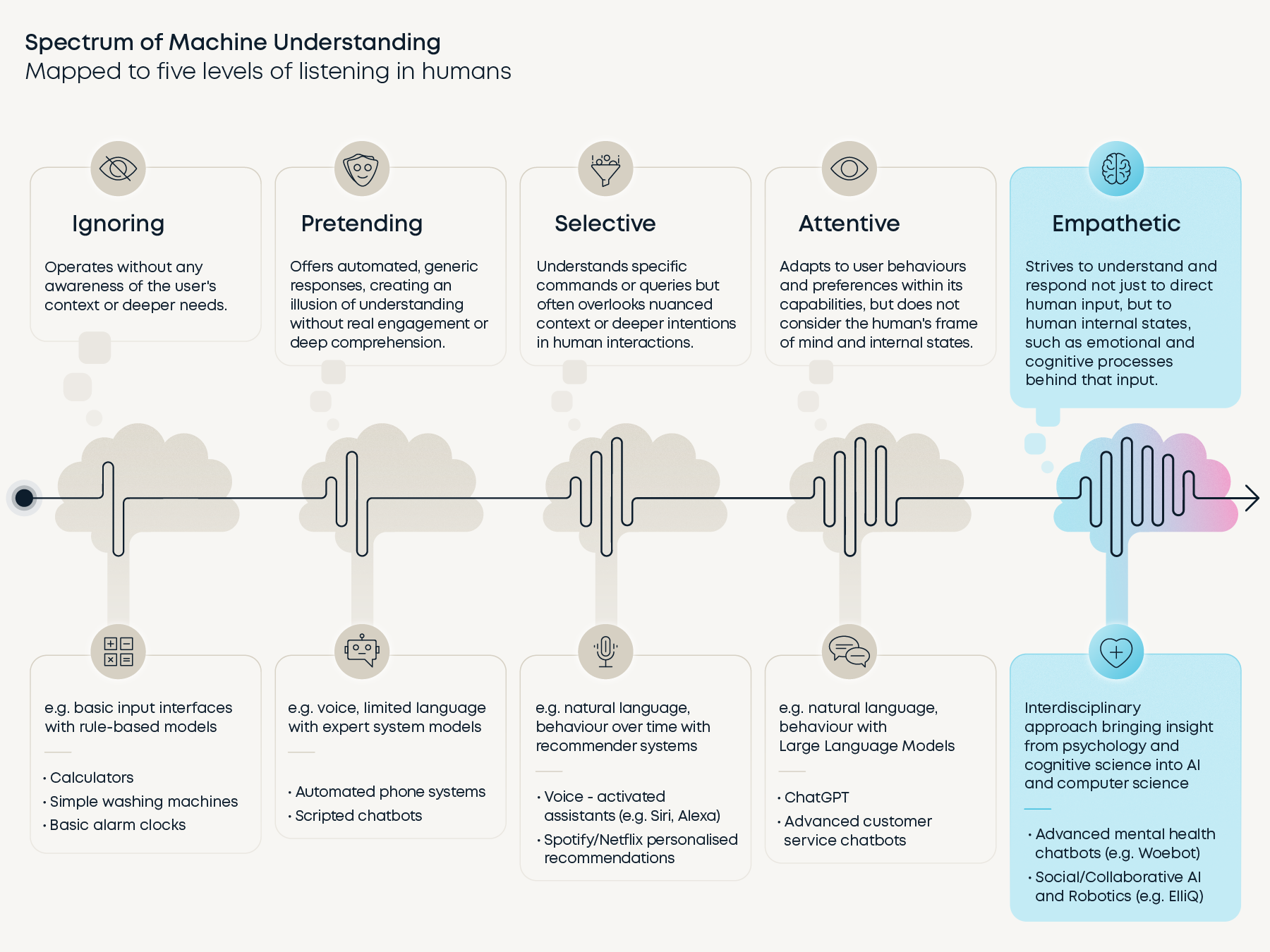

There are many ways to categorise levels of machine understanding in human interaction. You may be familiar with Stephen Covey’s Five Levels of Listening, as described in his book ‘The 7 Habits of Highly Effective People’. Here, we map levels of machine understanding of humans using the same categories. Machines, though, don’t all need to be empathetic. In fact, depending on the use case, each level on the spectrum might be perfectly fitting. A calculator doesn’t need empathy to get the job done, so the ‘ignoring’ level is fine. We have provided examples of machines at different levels, as well as the underlying technologies that could enable them. Note that the spectrum does not reflect a timeline. Technological innovation is applied across the board to make all types of machines perform better, regardless of their depth of human understanding.

An empathetic machine, on the other hand, will operate using two models – one related to the task it is working on, and the other of the human it is working with. These models will improve over time and through interaction – in the same way our mental models evolve as we work with each other – to eventually converge on a shared mental model. Machines with such a capability will revolutionise everything, from our working environments to our personal lives.

Imagine a collaborative assembly robot on a factory shop floor. Instead of going through a pre-choreographed task, where you as the user must adhere to the robot’s rhythm and actions, the robot observes your behaviour and adheres to you. It will monitor your behaviour as well as the environment to identify your intentions, preferences and needs. It can then support you as and when you need it to.
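To make the two-model idea a little more concrete, here is a minimal sketch of the assembly-robot scenario. It is purely illustrative and not a description of any CC system: the names `HumanModel`, `TaskModel` and `plan_handover`, the use of a simple exponential moving average, and all the numbers are assumptions chosen for the example. The robot keeps a running estimate of the worker’s pace and times its next handover to match, within the limits of the task.

```python
from dataclasses import dataclass


@dataclass
class HumanModel:
    """Hypothetical running estimate of the worker's time per step (seconds)."""
    expected_step_s: float
    smoothing: float = 0.5  # weight given to the newest observation (assumed value)

    def update(self, observed_step_s: float) -> None:
        # Exponential moving average: blend the new observation with the old estimate.
        self.expected_step_s = (
            self.smoothing * observed_step_s
            + (1.0 - self.smoothing) * self.expected_step_s
        )


@dataclass
class TaskModel:
    """Hypothetical task constraint: the robot cannot hand over faster than this."""
    min_handover_s: float


def plan_handover(human: HumanModel, task: TaskModel) -> float:
    # Follow the worker's estimated pace, but never go below the task's limit.
    return max(task.min_handover_s, human.expected_step_s)


human = HumanModel(expected_step_s=10.0)  # initial guess before any observation
task = TaskModel(min_handover_s=4.0)

schedule = []
for observed in [8.0, 6.0, 6.0, 2.0, 2.0]:  # made-up observed step times
    human.update(observed)
    schedule.append(plan_handover(human, task))

print(schedule)  # [9.0, 7.5, 6.75, 4.375, 4.0]
```

The robot’s timing drifts towards the worker’s rhythm as observations accumulate, and the final value shows the task model capping how far it will follow. A real system would infer far richer state, such as intentions and preferences, from many more signals than a single timing measurement.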

In the wellbeing space, such capability would allow machine companions to support our mental health and personal development through better understanding. The aim should not be to replace human therapists and psychologists, but to provide a reliable tool to be used in parallel to therapy or for support in initial triage.

Only recently we took part in a study conducted by the University of Cambridge on using smart robots as wellbeing coaches in the workplace. The study, published at the 2023 ACM/IEEE Conference on Human-Robot Interaction, showed the potential of technology for promoting mental wellbeing in professional settings. The findings highlight the importance of the form of delivery, in this case the physical embodiment of a machine wellbeing coach, in how the exercises are perceived by human participants.

CC enthusiastically supports early-stage academic research as we firmly believe in laying strong scientific foundations for future advancements, offering insights and resources that can empower our work and that of other innovators and researchers.

The challenges of human-centred AI

Foremost among the challenges we are facing in our research activities is understanding humans in context. Obviously, the key requirement for empathetic technology is that machines understand humans. They must be able to observe human behaviour to infer how we are thinking and feeling about the task at hand. Modelling how our mental processes relate to our behaviour, and how we can infer one from the other, is a topic of active research in cognitive science, AI and affective computing.

While there are promising results in specific, limited scopes, developing a general model is a real obstacle. It is not made easier by the fact that understanding human behaviour, and building empathy, is only possible in context. We can understand the meaning behind each other’s actions through a combination of familiarity with human behaviour, and knowledge of the context of the observed behaviour.

Familiarity with human behaviour alone, for example recognising that someone is walking and moving between rooms, is not enough to understand what they are doing. Capturing such context and modelling how an environment evolves through interaction (that is, having a world model) is one of the hottest topics of research in AI and autonomous systems.

Focusing on specific tasks, where we can model the context, and observe human behaviour within it, allows us to create algorithms that can apply reasoning, or predict human behaviour, within the confines of that environment. There is also ongoing research into abstraction methods that allow the transfer of knowledge of certain behaviours from one context to another.

At CC we’re working through these challenges, considering the merits of these state-of-the-art solutions and exploring ways to extend them to increase their applicability to real-world problems.

Ethics and trust in empathetic AI

Now let’s turn to another key challenge, that of ethics and trust. Building empathetic machines will depend on various measures of human behaviour and activities. Deeper understanding relies on deeper communication and observation – something that cannot be achieved without raising concerns over privacy.

Beyond that issue, there will be ethical questions about endowing machine agents with higher agency, potentially to make decisions for us or to influence our decision-making process and behaviour. Aside from worries about malicious activity, the competence of the technology in responding appropriately to the human user, and what effect this might have on them, is a source of safety risks.

Technologists looking to make a positive impact in this space need to be acutely aware of these challenges and incorporate them into their solutions as early as possible. CC is tackling the issues head on. Our AI experts are formulating a robust approach to AI assurance, founded on strong guidance in areas such as safety, ethics, security and more. You can read more about ways to ensure responsible AI development here: AI assurance – protecting next-gen business innovation.

If you’d like to discuss any aspect of the topic, please reach out to us, Michelle Lim and Ali Shafti. It’ll be great to hear from you.